Abstract

Cixin Liu’s “Wandering Earth” is a more recent depiction of Earth being used as a starship. Like Stanley Schmidt’s “Lifeboat Earth” (1976), it does seem like something of a rush job: in Liu’s tale the Sun was about to undergo the Helium Flash, so there was no time to create a way of illuminating the Earth during the Long Cold between stars. Here at Crowlspace I’m examining what a less hurried approach could look like.

Introduction

Earth travels around the Sun at about 1/10,000th of lightspeed, and the Sun travels around the Galaxy at about 0.08% of lightspeed. What if the Earth could travel, relative to the Sun and other nearby stars, somewhat faster? Say, 0.2% of lightspeed? That allows travel at a rate of 500 years per light-year. Proxima Centauri is presently moving towards the Sun at ~25 km/s, so by the time we arrive it will be about 4 light-years away, and the trip will take about 2,000 years.
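The travel-time arithmetic above can be checked with a few lines of Python. This is a sketch under simplifying assumptions: a constant cruise speed of 0.2% of lightspeed (the acceleration and deceleration phases are ignored), Proxima’s approach speed taken as the ~25 km/s quoted above, and helper names that are purely illustrative.

```python
# Travel-time arithmetic for "Starship Earth" at a constant 0.2% of lightspeed.
C_KM_S = 299_792.458  # speed of light, km/s


def years_per_light_year(beta: float) -> float:
    """Years needed to cover one light-year at a speed of beta * c."""
    return 1.0 / beta


def trip_years(distance_ly: float, beta: float) -> float:
    """Cruise time in years, ignoring the acceleration and deceleration phases."""
    return distance_ly * years_per_light_year(beta)


def approach_ly(radial_km_s: float, years: float) -> float:
    """Light-years a star closes in over `years` at a constant radial speed.
    Something moving at v/c of lightspeed covers v/c light-years per year."""
    return (radial_km_s / C_KM_S) * years


beta = 0.002  # 0.2% of lightspeed
t = trip_years(4.0, beta)
print(f"cruise speed: {beta * C_KM_S:.0f} km/s")
print(f"{years_per_light_year(beta):.0f} years per light-year")
print(f"4 light-years: {t:.0f} years")
print(f"Proxima closes in by ~{approach_ly(25.0, t):.2f} light-years over the trip")
```

Proxima closes in by about 0.17 light-years over the 2,000 years, which is why its present distance of about 4.24 light-years shrinks to roughly 4 by arrival. Including the acceleration and deceleration phases would lengthen the trip somewhat.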

Of course that’s long compared to a human lifetime, and long compared to historical civilizations too. For example, from the first Pharaoh, Narmer, the Egyptians managed to remain roughly the same for ~2,500 years, before the Romans took over and shipped all their nice limestone back to Rome to make concrete. Very quickly after that, people forgot how to read Demotic and hieroglyphs. That’s roughly the lifetime of a written language. Cuneiform remained in use for about 2,500 years as well, even though the spoken languages it recorded changed from the original Sumerian. Chinese characters are similarly venerable. Even Greek has managed a respectable 2,700 years.

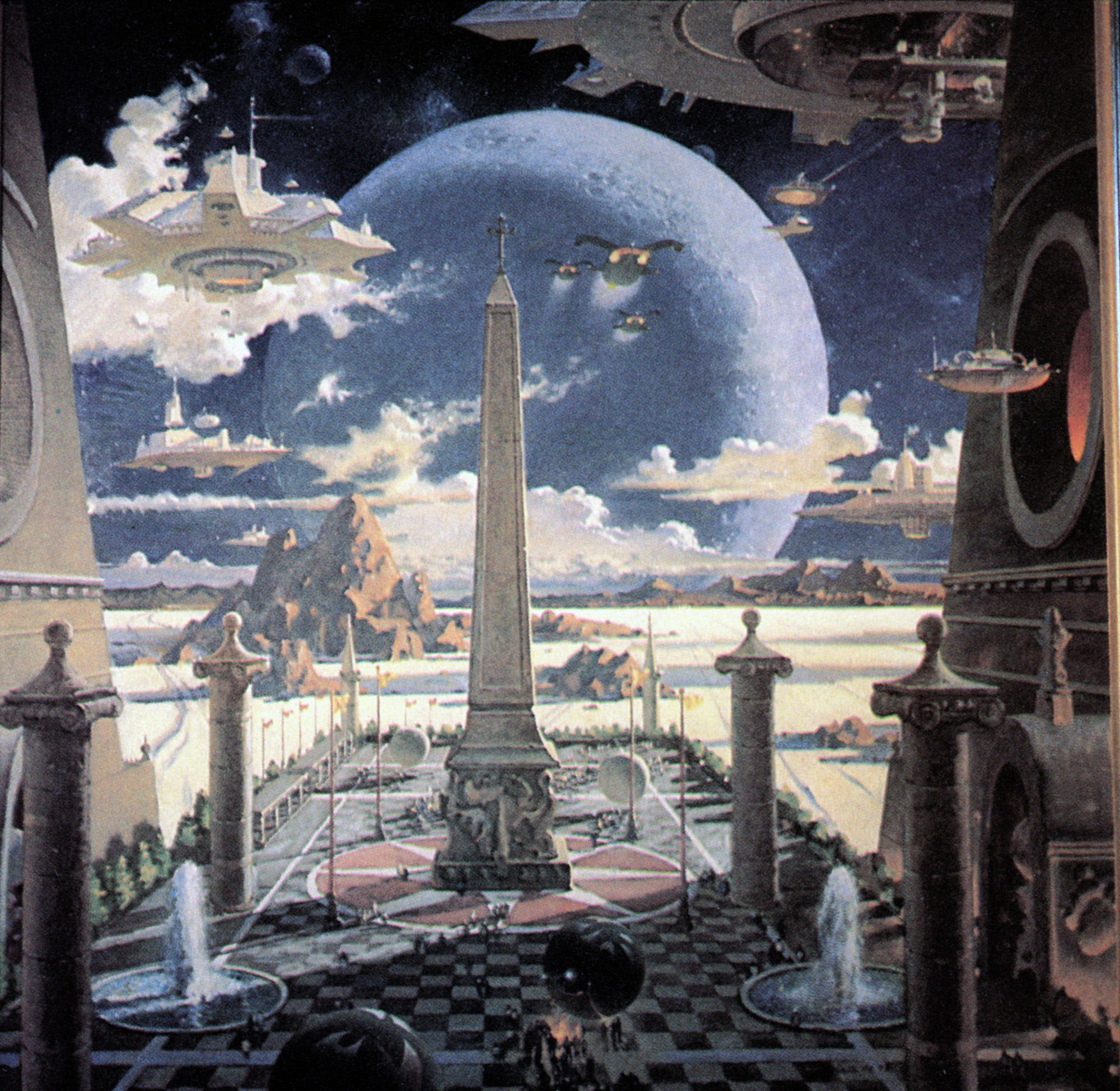

Olaf Stapledon imagined that Civilizations embarking on Interstellar travel via Mobile Planets would achieve a “Planetary Consciousness” in which everyone could communicate “mind to mind” (he’s a bit vague about whether this was via technology or telepathy) and develop a long view of history. Of course, he also imagined that longevity would allow individuals to live for millennia, so Civilizations could take an even longer view of future time.

Stapledon’s “Star Maker” (1937) is available via Project Gutenberg here: Star Maker, by Olaf Stapledon

Stapledon’s discussion of “Artificial Planets” is derived from J. D. Bernal’s little book “The World, the Flesh and the Devil” (1929), here:

The World, the Flesh & the Devil: An Enquiry into the Future of the Three Enemies of the Rational Soul

J. D. Bernal was an early crystallographer and thus on the cutting edge of the science of his time. He had some unique opinions. The section of interest is here: The World. It reads much like O’Neill’s discussion in the 1970s, or just about anything in the current space-colonist community. The one difference from most modern ideas is that Bernal assumed zero-gee environments.

Quoting “Star Maker”:

Actual interstellar voyaging was first effected by detaching a planet from its natural orbit by a series of well-timed and well-placed rocket impulsions, and thus projecting it into outer space at a speed far greater than the normal planetary and stellar speeds. Something more than this was necessary, since life on a sunless planet would have been impossible. For short interstellar voyages the difficulty was sometimes overcome by the generation of sub-atomic energy from the planet’s own substance; but for longer voyages, lasting for many thousands of years, the only method was to form a small artificial sun, and project it into space as a blazing satellite of the living world. For this purpose an uninhabited planet would be brought into proximity with the home planet to form a binary system. A mechanism would then be contrived for the controlled disintegration of the atoms of the lifeless planet, to provide a constant source of light and heat. The two bodies, revolving round one another, would be launched among the stars.

Translating to modern terminology, the propulsion energy comes from mass-annihilation. Given the various conservation laws, there’s one way to convert baryons into energy in the absence of equal amounts of antimatter, and that’s via the sphaleron processes allowed by the Standard Model. It’s possible that a particular annihilation process exists which converts baryons into neutrinos, rather than pesky gamma-rays. This would allow an “apparently reactionless drive” (ARD), since it would produce no visible drive plume in the Interstellar Medium (ISM). Cosmologist Frank Tipler, who will be at 2023’s IRG Interstellar Symposium in Montreal, has proposed such a drive, as did Robert L. Forward before him in the 1990s. It’s possible that some of the UAPs recently revealed by the DoD/NASA task-force use such ARDs. That would certainly make sense of some of their insane maneuvering capabilities.

Such a drive would allow Earth to be pushed to 0.002 c by using about 0.2% of its rest-mass, with another 0.2% expended to decelerate at the destination. The Moon, for comparison, has 1.23% of the Earth’s mass. Given a reasonable reluctance to use the Earth’s own mass for an interstellar journey, using the Moon makes more sense. Additionally, the Moon can serve as a gravitational tractor: the drive pushes the Moon, and the Moon’s gravity pulls the Earth along at the same acceleration, so the Earth-Moon system accelerates as one. Accelerating the Earth-Moon system to 600 km/s in about 500 years requires an acceleration of about 3.8E-5 m/s², and thus initially 2.3E+20 newtons of thrust. For the Moon’s gravity alone to give the Earth that acceleration, the Moon has to hover ahead of the Earth, rather than orbit it, at about 56 Earth radii (roughly 359,000 km). That is only slightly closer than its present average distance of about 60 Earth radii, so the Moon would look only a little larger than it does today.

The ARD would need to be annihilating almost 800 million tonnes per second. As the Moon’s mass declines, it has to move closer to the Earth to keep pulling it just as hard: assuming a constant acceleration and all propellant drawn from the Moon, to about 51.5 Earth radii by the end of the acceleration phase and about 46 Earth radii by the end of the deceleration phase. Stapledon’s interstellar travellers eventually swapped moving natural planets for mobile artificial worlds able to cruise at half lightspeed, criss-crossing the Galaxy. But where is Starship Earth going? And why?
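Before turning to that question, the propulsion figures in the last two paragraphs can be checked with a short Python sketch. It assumes the exhaust leaves at lightspeed (photons, or neutrinos light enough to count as massless), a constant acceleration over each 500-year phase, all propellant drawn from the Moon, and the Moon held on station ahead of the Earth so that its gravity alone gives the Earth the required acceleration. The constants are standard reference values; the function names are illustrative only.

```python
import math

# Standard physical constants and reference values (SI units)
C = 299_792_458.0     # speed of light, m/s
G = 6.674e-11         # gravitational constant, m^3 kg^-1 s^-2
YEAR = 3.15576e7      # Julian year, s
M_EARTH = 5.972e24    # kg
M_MOON = 7.342e22     # kg
R_EARTH = 6.371e6     # mean Earth radius, m


def mass_fraction_used(beta: float) -> float:
    """Fraction of initial mass spent by a rocket whose exhaust leaves at c
    to change speed by beta * c. Relativistic photon-rocket relation:
    m_final / m_initial = sqrt((1 - beta) / (1 + beta))."""
    return 1.0 - math.sqrt((1.0 - beta) / (1.0 + beta))


def tractor_distance(moon_mass: float, accel: float) -> float:
    """Earth-Moon separation at which the Moon's gravity alone gives the Earth `accel`."""
    return math.sqrt(G * moon_mass / accel)


beta = 0.002
accel = beta * C / (500 * YEAR)  # assumed constant acceleration over ~500 years, m/s^2
m_system = M_EARTH + M_MOON
thrust = m_system * accel        # initial thrust on the Earth-Moon system, N
mdot = thrust / C                # mass annihilated per second when all exhaust leaves at c (F = mdot * c)
frac = mass_fraction_used(beta)

print(f"acceleration: {accel:.2e} m/s^2")
print(f"mass fraction per phase: {frac:.4%}")
print(f"initial thrust: {thrust:.2e} N")
print(f"annihilation rate: {mdot / 1e9:.0f} million tonnes/s")

moon = M_MOON
r0 = tractor_distance(moon, accel)
r = r0
print(f"start: {r0 / R_EARTH:.1f} Earth radii")
for phase in ("acceleration", "deceleration"):
    spent = frac * m_system            # propellant burned during this phase
    m_system -= spent
    moon -= spent                      # assumption: all propellant is drawn from the Moon
    r = tractor_distance(moon, accel)  # a lighter Moon must sit closer to pull Earth at `accel`
    print(f"end of {phase}: {r / R_EARTH:.1f} Earth radii")
```

As written it gives an acceleration of about 3.8E-5 m/s², an initial thrust of about 2.3E+20 N and an annihilation rate of roughly 770 million tonnes per second, with the Moon starting about 56 Earth radii away and closing to about 51.5 and 46 Earth radii by the ends of the two phases.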

Choice of Destination

The usual motivation for moving the Earth to another star is to escape the death of the Sun. Moving to Proxima Centauri, for example, would gain Earth’s biosphere several trillion years of a steady-state Main Sequence star. But that’s not the maximum available to a living world if there are energy sources other than stars. Nuclear fusion converts, at most, 0.9% of mass into energy. Accreting black holes can achieve higher conversion fractions, from several percent up to a few tens of percent for rapidly spinning holes. But baryons and electrons are only 5% of the mass-energy content of the Cosmos. There’s about 5 times as much in the form of Dark Matter, and almost three times that in the form of “Dark Energy”. If those can be efficiently harvested, then the lifespan of biospheres could perhaps be extended a trillionfold beyond what stars alone can offer.

However, the long-term fate of the Milky Way and its Local Group of galaxies may not be hospitable to the very long lifespans of Dark Matter/Energy-powered Life. So where to go? In a sequel I’ll examine the question further.